The Eyes Don't Lie: Detecting Deepfakes Through Iris Analysis

NOTE: This blog post summarizes work I did at the University of Illinois Urbana-Champaign (UIUC) in 2022. While these techniques are obsolete in the current age of generative AI, some of the findings still hold and offer a useful perspective on how deepfakes were caught in the early days (only a few years ago!).

Abstract

For decades, the general population has trusted visual media as a reliable source of information. While video manipulation was always possible, it took experts significant time and effort to modify a video frame by frame well enough to fool the viewer. With recent developments in machine learning, it’s possible to generate deepfakes in near real time from a single image of the victim. This causes problems for identity verification, where an attacker can assume the victim's identity for political or financial gain. In this work I explore using the eyes to detect deepfakes, through temporal analysis of the iris region with Recurrent Neural Networks. I evaluate the method against the DFDC-P dataset, which contains thousands of generated deepfakes. Quantitative analysis shows that it achieves results comparable to several established deepfake detection models.

The Growing Threat of One-Shot Deepfakes

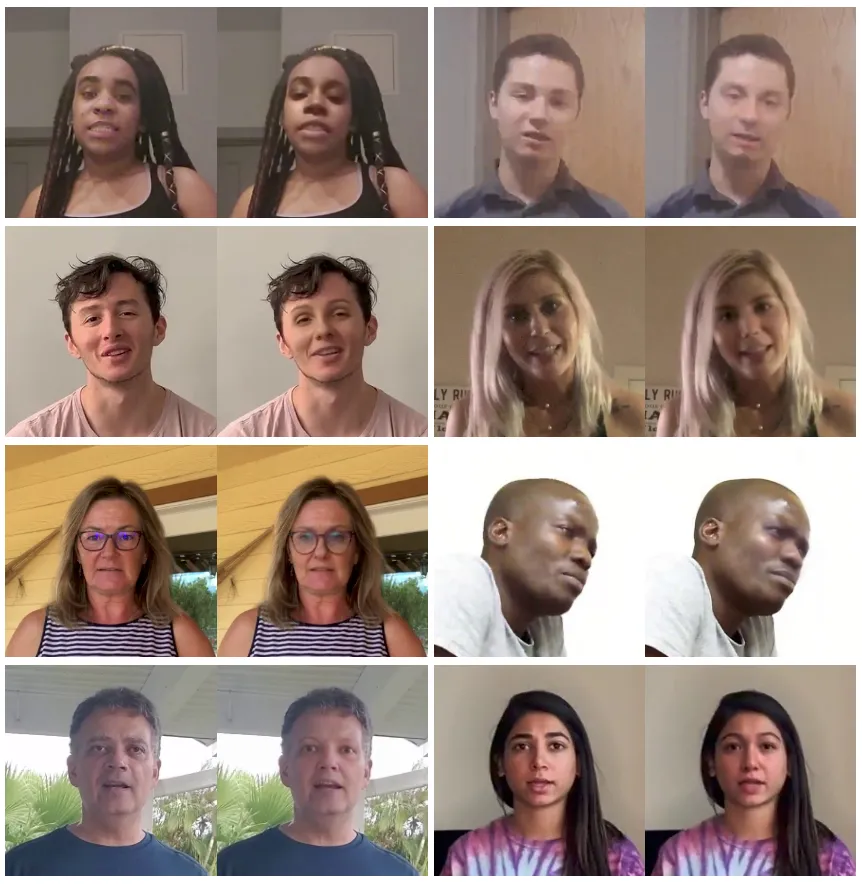

Traditional deepfake creation required hundreds or thousands of images of a target person. This limitation meant that only public figures with extensive online presence were vulnerable. That’s no longer the case.

Modern “one-shot” deepfake generators like SimSwap and tools like the Deepfake Offensive Toolkit (dot) can create convincing impersonations from a single photograph. Within minutes, anyone with basic technical knowledge can assume someone else’s identity on video—bypassing facial recognition systems and automated identity verification.

The implications are scary: financial fraud through video-based authentication, political misinformation, and personal harassment have all become dramatically easier to execute.

A Novel Approach: Looking Into the Eyes

While major tech companies pour resources into broad deepfake detection methods, this work explores a more targeted approach: temporal iris analysis.

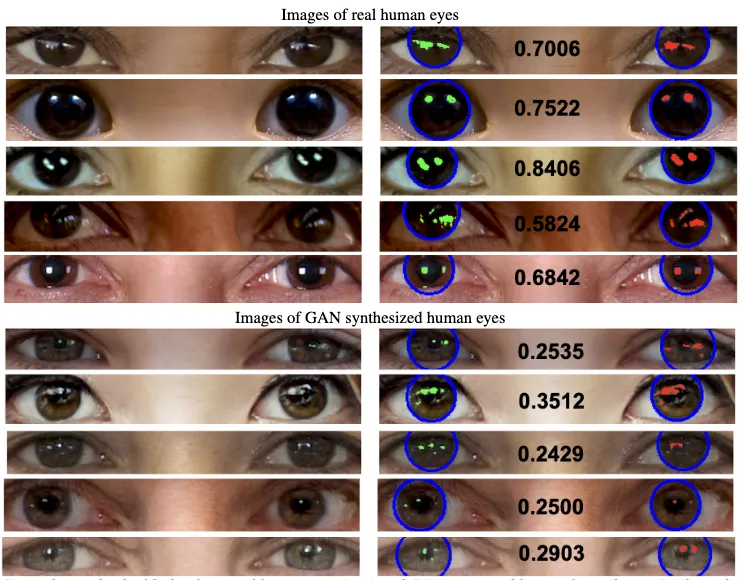

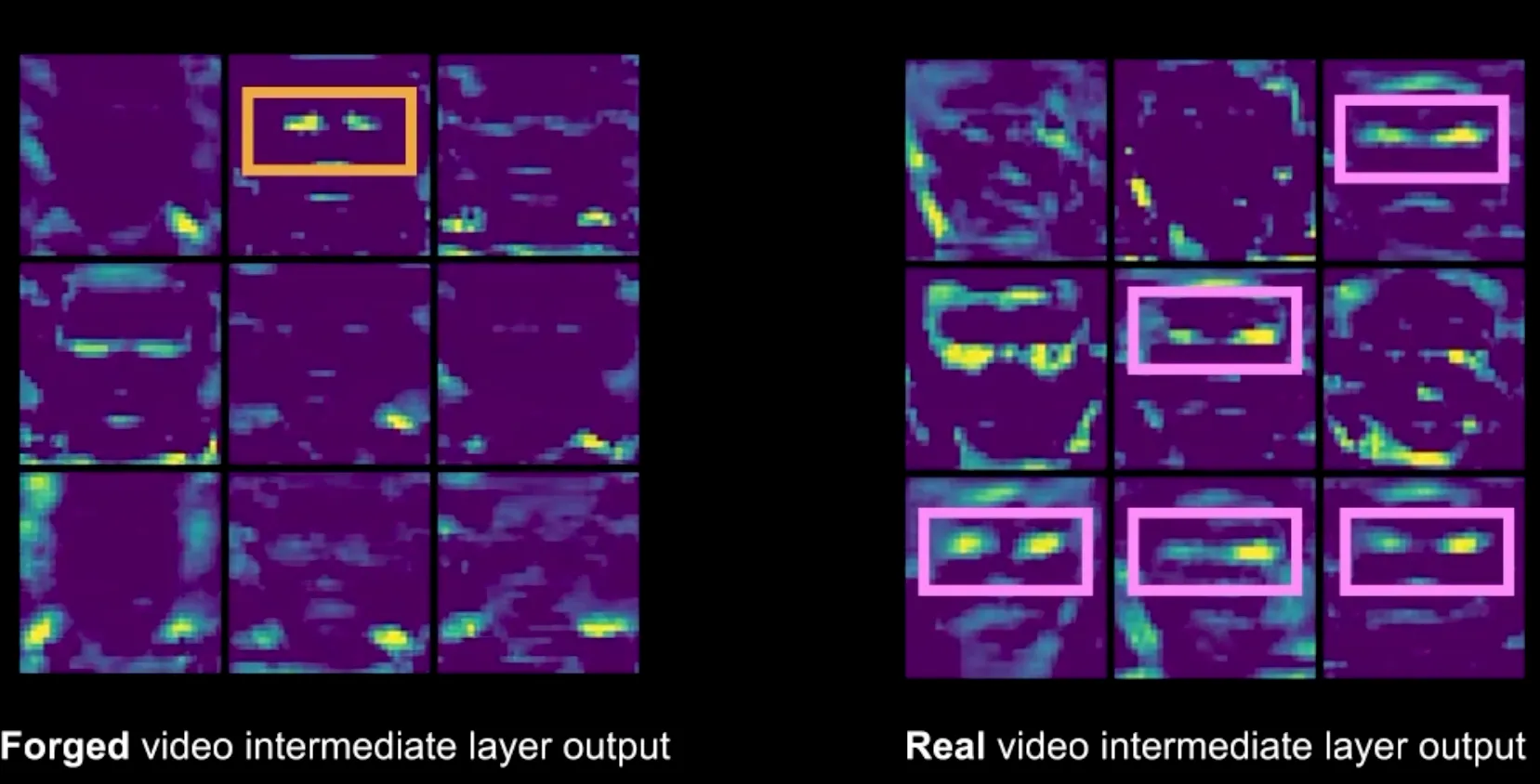

The core insight is elegant. Generative Adversarial Networks (GANs)—the technology powering most deepfakes—consistently struggle to render believable eyes. Prior research on GAN-generated faces revealed that synthetic eyes exhibit inconsistent corneal specular highlights, with significantly lower consistency scores compared to real human eyes.

This weakness persists in deepfake videos. By cropping frames to focus exclusively on the eye region and analyzing how this region changes across time, researchers can detect telltale artifacts that betray manipulation.

How the Detection Works

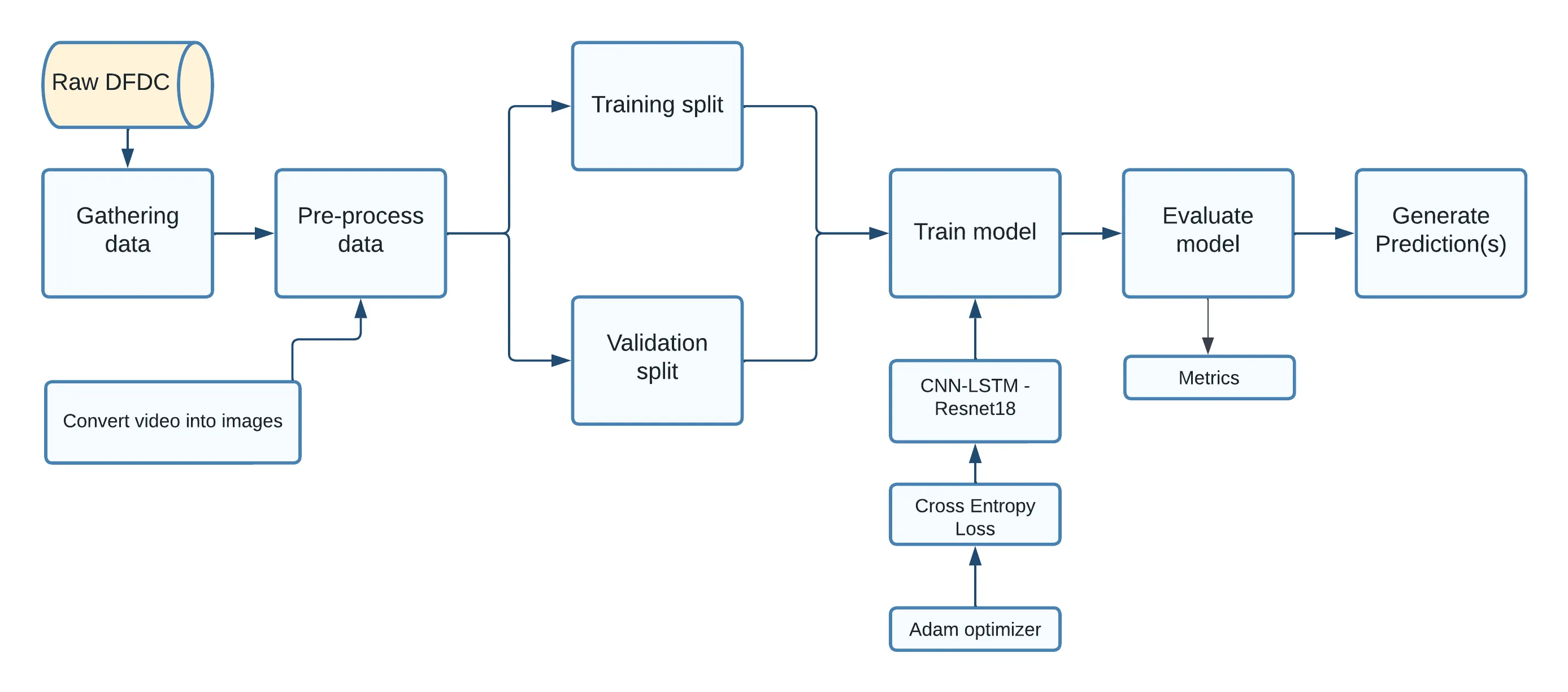

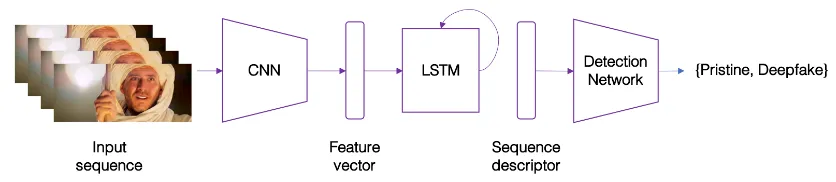

The detection pipeline combines two powerful neural network architectures:

Convolutional Neural Networks (CNNs) extract visual features from each iris-cropped frame. Using a pre-trained ResNet50 model, the system identifies subtle patterns and artifacts that distinguish real eyes from generated ones.

Long Short-Term Memory networks (LSTMs) then analyze these features across multiple frames, capturing temporal inconsistencies. Many deepfake generators process video frame by frame without awareness of previous frames, creating subtle discontinuities that accumulate over time.
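To make the temporal step concrete, here is a minimal sketch of how an ordered list of per-frame items (for example, CNN feature vectors) could be grouped into fixed-length sequences before being handed to a recurrent model. The function name `make_sequences` and the `seq_len` and `stride` values are assumptions for illustration, not the settings used in this work.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def make_sequences(frames: Sequence[T], seq_len: int = 16, stride: int = 8) -> List[List[T]]:
    """Split an ordered list of per-frame items into fixed-length windows.

    seq_len and stride are assumed values chosen only for illustration.
    """
    if seq_len <= 0 or stride <= 0:
        raise ValueError("seq_len and stride must be positive")

    windows: List[List[T]] = []
    # Only full windows are kept; a trailing partial window is dropped.
    for start in range(0, len(frames) - seq_len + 1, stride):
        windows.append(list(frames[start:start + seq_len]))
    return windows
```

Overlapping windows (a stride smaller than the sequence length) give the recurrent model several views of the same transition between frames, at the cost of more sequences to process.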

The preprocessing involves extracting frames from videos, using Google’s MediaPipe to locate facial landmarks, and cropping to isolate the eye region. This focused approach reduces noise and trains the model on the most discriminative features.
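The cropping step can be sketched roughly as follows. This assumes the eye landmarks arrive as normalized (x, y) pairs in the range 0 to 1, which is how MediaPipe's face landmark output is expressed, and that the frame is a plain list of pixel rows. The helper names `eye_region_box` and `crop` and the `margin` value are hypothetical and are not taken from the original pipeline.

```python
from typing import Iterable, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def eye_region_box(
    landmarks: Iterable[Tuple[float, float]],
    frame_width: int,
    frame_height: int,
    margin: float = 0.25,
) -> Box:
    """Return a padded pixel bounding box around normalized eye landmarks."""
    points = list(landmarks)
    if not points:
        raise ValueError("at least one landmark is required")

    # Convert normalized [0, 1] coordinates to pixel coordinates.
    xs = [x * frame_width for x, _ in points]
    ys = [y * frame_height for _, y in points]

    # Pad the tight box by a fraction of its own size (margin is an assumed value).
    pad_x = (max(xs) - min(xs)) * margin
    pad_y = (max(ys) - min(ys)) * margin

    # Clamp to the frame so the crop never reaches outside the image.
    left = max(0, int(min(xs) - pad_x))
    top = max(0, int(min(ys) - pad_y))
    right = min(frame_width, int(max(xs) + pad_x) + 1)
    bottom = min(frame_height, int(max(ys) + pad_y) + 1)
    return left, top, right, bottom


def crop(frame: List[list], box: Box) -> List[list]:
    """Crop a frame stored as a list of pixel rows (hypothetical representation)."""
    left, top, right, bottom = box
    return [row[left:right] for row in frame[top:bottom]]
```

A small margin around the landmarks keeps some of the surrounding skin in the crop, which matters because artifacts often show up where the generated eye meets the rest of the face.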

Below are examples showing the preprocessing stages for a real video frame versus a deepfake frame. Notice how the artifacts become more apparent when focusing on the iris region:

| Stage | Real | Fake |
|---|---|---|
| Full Frame |  |  |
| Face Crop |  |  |
| Iris Crop |  |  |

Validating the Approach

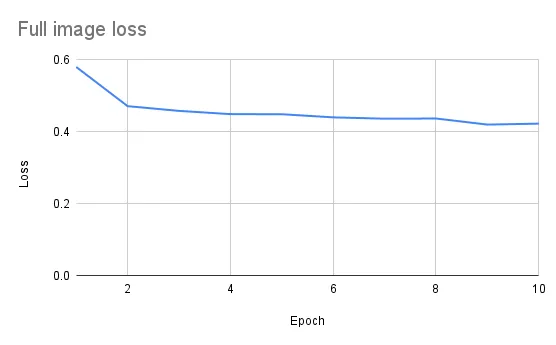

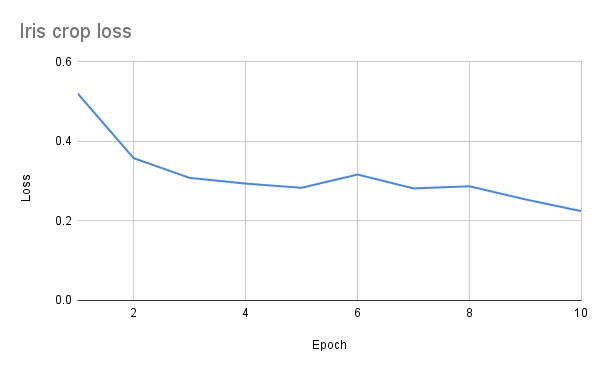

Initial experiments comparing iris-cropped versus full-image training showed promising results. A simple CNN trained on iris crops achieved 90.1% accuracy, compared to just 80.2% for full images on the same dataset subset.

When evaluated against the Deepfake Detection Challenge Preview dataset—a benchmark containing over 4,000 deepfakes across 66 unique individuals—the CNN-LSTM iris analysis method achieved an AUC score of 0.71, comparable to established methods like XceptionNet and Face Warping detection.

| Method | AUC Score |
|---|---|
| Head Pose Analysis | 0.55 |
| 2-Stream | 0.61 |
| CNN-LSTM-Iris-Crop | 0.71 |
| XceptionNet | 0.72 |
| Face Warping | 0.72 |
| MesoNet | 0.75 |
| Appearance and Behavior | 0.93 |
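For readers unfamiliar with the metric in the table, AUC can be read as the probability that a randomly chosen fake video receives a higher score than a randomly chosen real one, so 0.5 is chance and 1.0 is perfect separation. The sketch below computes it directly from that definition. The function name `roc_auc` and the convention that label 1 means "fake" are assumptions for illustration, and this is not the evaluation code used to produce the numbers above.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Rank-based AUC: chance that a random fake outscores a random real video.

    Assumes label 1 = fake and label 0 = real; ties count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one example of each class")

    wins = 0.0
    # Compare every fake score against every real score.
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

The pairwise loop is quadratic in the number of videos, which is fine for a small illustration. A library routine would be the practical choice for a full benchmark.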

Why Eyes Are the Weak Point

The research reinforces findings from MesoNet, a custom network designed for deepfake detection. When researchers visualized which image regions MesoNet weighted most heavily, the eyes stood out prominently—the network had independently learned to focus on eye regions as the most reliable indicator of manipulation.

At a fundamental level, GANs face challenges rendering eyes convincingly:

- Lighting consistency: Real eyes exhibit coherent specular highlights that match the scene’s lighting. GANs frequently produce mismatched or impossible reflections.

- Temporal coherence: Natural eye movements follow predictable patterns. Frame-by-frame generation disrupts this continuity.

- Fine detail: The iris’s intricate patterns and the subtle interplay of pupil, sclera, and surrounding skin push the limits of current generative models.

Limitations and the Road Ahead

The iris analysis approach excels against real-time, one-shot deepfakes—exactly the type of attack targeting automated verification systems. However, it faces challenges with heavily post-processed deepfakes where human editors have manually corrected visible artifacts.

The research also revealed issues with existing benchmark datasets. Some videos in the DFDC-P dataset exhibited mapping errors where the source identity wasn’t properly transferred, instead producing sharpened versions of the original face. These inconsistencies complicate model training and evaluation.

Future directions include combining iris analysis with behavioral detection methods—analyzing head movements, facial expressions, and other patterns that deepfakes struggle to replicate authentically. The most successful detector in benchmark tests, the “Appearance and Behavior” method, achieved its 0.93 AUC score precisely by incorporating these holistic signals.

The Broader Context

Deepfake detection represents an ongoing arms race. As detection methods improve, so do generation techniques. Yet understanding why certain approaches work—like the consistent weakness of GAN-rendered eyes—provides foundational knowledge that remains valuable even as specific techniques evolve.

For organizations relying on video-based identity verification, the message is clear: automated systems need layered defenses. Iris analysis offers one promising component of that defense, particularly effective against the rapid, low-effort attacks that one-shot deepfake tools enable.

The eyes, it turns out, really are windows—not just to the soul, but to the truth of whether we’re seeing a real person or a digital fabrication.